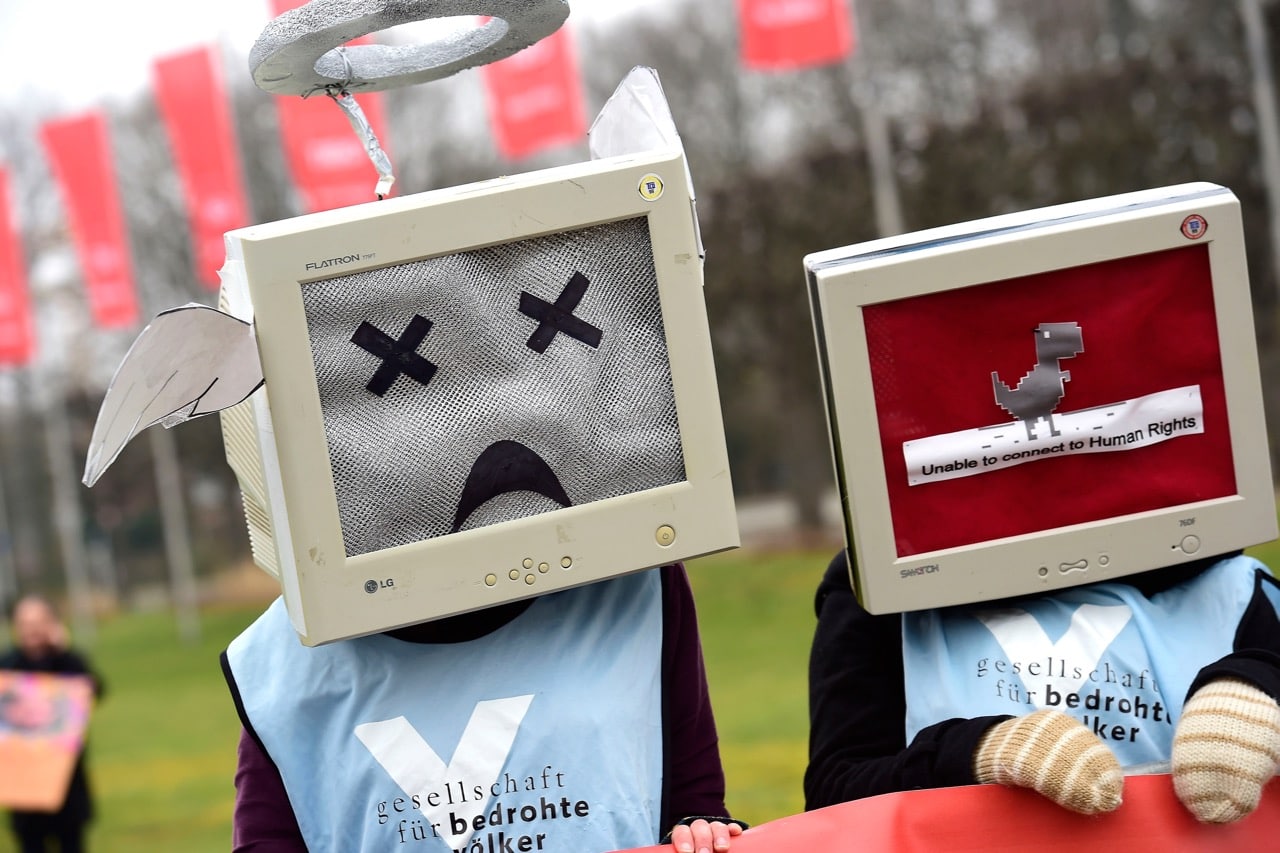

The technology that blocks and filters the internet, explained.

Online expression is blocked, filtered and throttled in a variety of ways by those seeking to suppress it. What can a basic technical understanding of how this censorship is implemented offer you? For expression and human rights defenders, knowing the basic options will make your policy advocacy with those imposing the censorship more targeted and effective, and will help you identify the appropriate technology for evading the censorship you’re facing.

This explainer will equip you with this core understanding of the many technical strategies used to deny access to websites and applications, from keyword filtering to deep packet inspection.

Blocks

Blocking and filtering are amongst the most common web censorship techniques. Some censorship efforts target websites, while others make applications and services inaccessible. Some blocking and filtering is compatible with the provisions of international human rights law, in which narrowly-defined limits on expression protect society from clearly-defined harms – but many blocks and filters are not.

Blocking is a targeted approach that renders a website or service inaccessible for a specific set of users, typically defined by geographic location. A block is often put in place with the cooperation of local internet service providers (ISPs), who prevent their end users from being able to make a connection to the content or service. For example, a government agency might instruct an ISP in its jurisdiction to block an entire domain, or a specific website address. Similarly, the instruction could be to block access to an application such as WhatsApp.

There are two main ways that this is achieved: IP blocking and DNS blocking.

IP blocking prevents data from being requested or transmitted based simply on its source or destination address. Every device that connects to the internet (phones, computers, ‘smart’ appliances and so on) has an internet protocol (IP) address assigned to it. An IP address is also assigned to every machine or server that provides content or services on the internet. Because IP addresses are allocated in blocks to regional registries and network operators, address ranges often correspond roughly to geographic location, so blocking an IP range can lead to an entire region being censored.
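The decision an IP block makes can be sketched in a few lines. This is a minimal illustration, not how any particular ISP implements it: the block list is hypothetical and uses address ranges reserved for documentation, and `is_blocked` is a name chosen for this example.

```python
import ipaddress

# Hypothetical block list, using documentation-only address ranges.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),   # an entire range of addresses
    ipaddress.ip_network("198.51.100.7/32"),  # a single server
]


def is_blocked(destination: str) -> bool:
    """Return True if the destination address falls inside any blocked range."""
    address = ipaddress.ip_address(destination)
    return any(address in network for network in BLOCKED_NETWORKS)


for candidate in ("203.0.113.45", "198.51.100.7", "198.51.100.8"):
    print(candidate, "blocked" if is_blocked(candidate) else "allowed")
```

Note that the first rule blocks every address in the range, whatever is hosted there. This is why IP blocks tend to cause collateral damage when many unrelated sites share an address or a range.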

The domain name system (DNS) is an addressing system that translates the domain names we commonly use to navigate the web, like example.com, into the IP addresses of the websites and services behind them. Domains are controlled by the domain owner. DNS blocking results in the inability to resolve specified domain names, or in the resolution of a specified domain to an incorrect IP address. DNS blocks are very common and are an easy way for states to enforce legal and judicial decisions, by forcing ISPs to implement DNS block lists. (For example, the UK blocks hundreds of thousands of domains!) If a DNS block is in place, when a user attempts to access a website by typing its address, the ISP’s DNS resolver might: 1) not return the IP address of the server hosting the website, 2) pretend that it cannot find the domain, or 3) return a different IP address, for example that of a server hosting a warning message.
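The behaviours of a blocking resolver described above can be modelled with a lookup table. This is only a sketch under stated assumptions: the domain names, addresses and the `resolve` function are hypothetical, and a real resolver speaks the DNS protocol rather than consulting a Python dictionary.

```python
from typing import Optional

# Hypothetical records an uncensored resolver would return.
REAL_RECORDS = {
    "open.example": "192.0.2.10",
    "blocked.example": "192.0.2.20",
    "redirected.example": "192.0.2.30",
}

# Hypothetical block list. None means "answer as if the name does not exist";
# an address means "answer with this server instead", such as a warning page.
BLOCK_LIST = {
    "blocked.example": None,
    "redirected.example": "192.0.2.99",
}


def resolve(domain: str) -> Optional[str]:
    """Return the address a censoring resolver hands back, or None for 'not found'."""
    if domain in BLOCK_LIST:
        return BLOCK_LIST[domain]
    return REAL_RECORDS.get(domain)


for name in ("open.example", "blocked.example", "redirected.example"):
    print(name, "->", resolve(name))
```

The servers behind the blocked names are still online and reachable by IP address. Only the lookup is tampered with, which is why switching to a different resolver can sometimes get around this kind of block.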

Filters

Filtering content is a more general approach to restricting expression that seeks to prevent access based on defined characteristics about the content being accessed, such as the use of particular words or apparent features of image content. Filtering software can be placed anywhere in the network, from a library router to undersea cable landings. This approach can be less time-consuming and more broadly effective for censorship implementers, as it can target internet content that has never been reviewed by a human moderator. For the same reason, filtering software also frequently over-censors, for example blocking scholarly discussion of terrorism while attempting to limit access to hate speech, or restricting access to sexual health education material while attempting to prevent access to pornography.

There are many, many ways that filtering can be applied. A few of the most common filtering techniques are:

Content filtering: All data traffic passing through a router is readable by whoever or whatever controls the router. If the connection is not encrypted, it is possible to read the content of websites accessed, emails sent and any other traffic, and therefore to filter all pages that contain certain words. This technique can be used in public spaces, by parents, and on routers at ISP level. Encryption such as HTTPS makes this type of content control more difficult.

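URL Filtering: Even when encryption is used, some metadata and basic routing information about our website requests remain visible to the router. In a similar manner to content filtering, this technique scans the visible parts of a requested address for specific keywords and blocks matching requests. On unencrypted connections the full URL can be scanned; with HTTPS the path is encrypted, but the domain name usually remains visible.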

Packet Filters: Packet filters are implemented on routers or servers to read packet headers, or metadata. Packet filters were designed to search for protocol non-compliance, viruses, spam or intrusions. If outgoing or incoming packets match the search criteria the filter may route those packets to a different destination, record the incident in logs, or silently drop them. While this feature can protect networks from attacks, packet filtering can easily be used for content control.

Deep Packet Inspection: Deep packet inspection (DPI) works in a similar way to packet filtering, but instead of simply looking at packet headers, DPI also reads the data within the packets. While this monitoring technique, like packet filtering, can be useful to identify, monitor and troubleshoot improperly formatted data or malicious packets, it has an even higher risk of being used for data mining, eavesdropping, internet censorship and net neutrality violations, because the full content of the internet traffic is inspected, not just the metadata. A packet which is marked as suspicious may be redirected, tagged, blocked, rate-limited and reported, or silently dropped.
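The difference between packet filtering and deep packet inspection comes down to which part of a packet the filter reads. The sketch below is a simplified model, not real networking code: the `Packet` class, the blocked port and the payload signature are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    src: str
    dst: str
    dst_port: int
    payload: bytes


BLOCKED_PORTS = {6881}            # hypothetical header rule
BLOCKED_SIGNATURE = b"forbidden"  # hypothetical payload pattern


def packet_filter(packet: Packet) -> str:
    """Header-only decision: this function never reads packet.payload."""
    return "drop" if packet.dst_port in BLOCKED_PORTS else "forward"


def deep_packet_inspection(packet: Packet) -> str:
    """Apply the header rule, then also scan the payload bytes."""
    if packet_filter(packet) == "drop":
        return "drop"
    if BLOCKED_SIGNATURE in packet.payload.lower():
        return "drop"
    return "forward"


web = Packet("192.0.2.1", "198.51.100.5", 80, b"GET /forbidden-topic HTTP/1.1")
print(packet_filter(web))           # forward: the headers look ordinary
print(deep_packet_inspection(web))  # drop: the payload matches the signature
```

Real systems may redirect, log or rate-limit rather than drop, as described above. The point here is only that the second function needs access to the packet contents, which is what encryption takes away.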

A universal requirement of filtering is the ability to see some part of what is being requested by end users. Encryption is an effective technique for circumventing many (though not all) filtering techniques – see below for further information.
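To illustrate why visibility matters, the following sketch applies one keyword filter to what a router could read with and without encryption. It rests on a simplifying assumption, labelled in the code: with HTTPS only the host name is exposed, while with plain HTTP the full URL and page body are readable. The keyword list and function names are hypothetical.

```python
from urllib.parse import urlsplit

BLOCKED_KEYWORDS = {"protest"}  # hypothetical keyword list


def visible_to_router(url: str, body: str) -> str:
    """Return the text an on-path router can read for this request.

    Simplifying assumption: HTTPS exposes only the host name,
    plain HTTP exposes the full URL and the page body.
    """
    parts = urlsplit(url)
    if parts.scheme == "https":
        return parts.hostname or ""
    return f"{url} {body}"


def is_filtered(url: str, body: str = "") -> bool:
    """Return True if any blocked keyword appears in the visible text."""
    visible = visible_to_router(url, body).lower()
    return any(keyword in visible for keyword in BLOCKED_KEYWORDS)


print(is_filtered("http://news.example/protest-march"))   # True
print(is_filtered("https://news.example/protest-march"))  # False
print(is_filtered("https://protest.example/"))            # True
print(is_filtered("http://news.example/history", "A history of protest movements"))  # True
```

The last call shows the over-censoring problem mentioned earlier: a scholarly page is caught because it contains the keyword. The third call shows why encryption helps against many filters but not all of them.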

Other techniques

Censorship regimes often combine both blocking and filtering – but there are even more ways to ensure the internet is censored. Other common techniques include:

Throttling: Also known as degraded or differential service, throttling makes access to some services or websites very difficult or impossible for some users. For example, when users of the anonymising tool Tor (The Onion Router) visit some websites they may experience rejection, limitations on their access, or requirements such as CAPTCHA in order to view web content. Other examples include violations of net neutrality in which streaming video is deprioritised or slowed down on the network.

Network shutdowns: Routing between the networks that make up the internet is handled by the simple yet powerful Border Gateway Protocol (BGP). BGP routing is so fundamental to the internet that small mistakes can have a big impact. Deliberately manipulating BGP, by publishing wrong network routes or withdrawing correct ones, can cut off entire parts of the internet. In 2008, Pakistan incorrectly implemented BGP routing to censor YouTube content, and blocked the website across the entire world for a few hours.
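One way a wrong route can win is that routers generally prefer the most specific matching prefix. The sketch below shows only that rule, using private addresses and hypothetical network names. Real BGP route selection weighs many more attributes, so treat this as an illustration of the idea rather than a model of BGP.

```python
import ipaddress


def best_route(routes, destination):
    """Return the origin whose announced prefix matches most specifically."""
    address = ipaddress.ip_address(destination)
    matching = [
        (ipaddress.ip_network(prefix), origin)
        for prefix, origin in routes
        if address in ipaddress.ip_network(prefix)
    ]
    if not matching:
        return None
    # Longest-prefix match: the largest prefix length is the most specific route.
    return max(matching, key=lambda item: item[0].prefixlen)[1]


# Hypothetical routing table: the legitimate network announces a /22.
routes = [("10.0.0.0/22", "video-site")]
print(best_route(routes, "10.0.1.25"))  # video-site

# Another network announces a more specific /24 inside that range.
routes.append(("10.0.1.0/24", "censoring-isp"))
print(best_route(routes, "10.0.1.25"))  # censoring-isp
print(best_route(routes, "10.0.2.25"))  # video-site
```

If such an announcement spreads beyond the network that made it, traffic from elsewhere follows the more specific route too. This is how a block intended for one country can affect users far outside it.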

Content and search removal: Publishers, authors and service providers have to take down, unpublish, unlist or otherwise hide content when required to do so by applicable law or by legitimate government requests. Under EU law, for example, the “right to be forgotten” requires search engines to consider requests from individuals to remove links to freely accessible web pages that appear in searches on their name. When content is censored through take-down requests, publishers can choose to pretend that the content has not been found or, for more transparency, can tell the user that the content exists but has been blocked, by responding with the HTTP status code 451, “Unavailable For Legal Reasons”.
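The choice a publisher faces between hiding a take-down and disclosing it can be expressed as a choice of HTTP status code. This is a minimal sketch with hypothetical paths and a hypothetical `status_for` function; the status codes themselves come from Python's standard `http` module.

```python
from http import HTTPStatus

PUBLISHED = {"/articles/1", "/articles/42"}  # hypothetical site content
TAKEN_DOWN = {"/articles/42"}                # hypothetical legal take-down


def status_for(path: str, transparent: bool = True) -> HTTPStatus:
    """Choose the status code a publisher returns for a requested path."""
    if path in TAKEN_DOWN:
        if transparent:
            return HTTPStatus.UNAVAILABLE_FOR_LEGAL_REASONS  # 451
        return HTTPStatus.NOT_FOUND  # pretend the page never existed
    return HTTPStatus.OK if path in PUBLISHED else HTTPStatus.NOT_FOUND


status = status_for("/articles/42")
print(status.value, status.phrase)
```

With `transparent=False` a removed page is indistinguishable from one that never existed, which makes the censorship much harder for readers and researchers to measure.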

When can censorship be legally applied?

There are a number of potential legitimate reasons for censoring content, depending on differing cultural frameworks related to morality and opinion, security, religion, politics or political processes, or economics. For human rights advocates, it is widely accepted that any restriction on the right to freedom of expression must adhere to the “three-part test”.

Censorship must meet all three conditions:

• Provided by law;

• For the purpose of safeguarding a legitimate public interest; and

• Necessary and proportionate to secure this interest [1]

[1] For an elaboration of this test see Goodwin v. United Kingdom, 27 March 1996, Application No. 17488/90, 22 EHRR 123 (European Court of Human Rights), paras. 28-37.

Censorship circumvention

There are many reasons why internet users might want to circumvent censorship and monitoring of their internet use.

DNS proxies and VPNs are two of the most common anti-censorship tools, both of which allow user devices to send their traffic through an intermediary for whom the content is not blocked. Further information on how to evade censorship is available on hubpages developed by EFF and ARTICLE 19.

Censorship circumvention technology will not help all users, however, for several reasons. Sometimes the circumvention service or tool is itself blocked. The use of circumvention techniques can sometimes be detected, and may put the user at risk of punishment by the blocking authorities. Other times the circumvention technology is simply too technical or expensive for the casual internet user to employ.

Advocating at the protocol level

For these reasons, important advances are being made in the low-level architecture of the internet to make censorship technically harder to carry out. In places where extensive blocking and filtering are less common, these new internet protocols are seen as (and are) privacy protecting. But for people who regularly face censorship, these privacy-enhancing protocols will also help reduce censorship. Earlier, it was mentioned that much blocking and filtering is done with DNS. If DNS can be designed to be more privacy protecting and not reveal who you are, where you are located and what website you would like to visit, then it becomes much harder to use those methods of censorship to keep you from the content that you would like to access.
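The text above does not name a specific protocol. As one illustration, assumed here rather than taken from the article, DNS over HTTPS (DoH, RFC 8484) wraps an ordinary DNS query inside an encrypted web request. The sketch below only builds the query and encodes it for a DoH GET request; it does not contact any resolver, and the function names are hypothetical.

```python
import base64
import struct


def build_dns_query(domain: str) -> bytes:
    """Build a minimal DNS query (type A, class IN) in wire format."""
    # Header: ID 0, flags 0x0100 (recursion desired), one question, no other records.
    header = struct.pack("!HHHHHH", 0, 0x0100, 1, 0, 0, 0)
    # Question: each label prefixed by its length, ended by a zero byte,
    # followed by QTYPE 1 (A record) and QCLASS 1 (internet).
    name = b"".join(
        bytes([len(label)]) + label.encode("ascii") for label in domain.split(".")
    )
    return header + name + b"\x00" + struct.pack("!HH", 1, 1)


def doh_get_parameter(domain: str) -> str:
    """Encode the query as the unpadded base64url value of a DoH 'dns' parameter."""
    raw = build_dns_query(domain)
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


print(doh_get_parameter("www.example.com"))
```

Sent over HTTPS, this lookup looks like any other encrypted web traffic to a network operator along the path, so the operator cannot read or rewrite the answer in the way a DNS block requires. The resolver that receives the query still sees it, so the choice of resolver matters.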

There are human rights advocates working with the technical community and internet standards bodies to support these new protocols, both because they protect user privacy and because they make both targeted and blanket censorship much harder.

Conclusion

With so many methods for censoring expression online, multiple techniques are often used simultaneously, or in series from the easiest method of censorship to the most difficult. Techniques for censorship and evasion continue to evolve, and for every action there is often eventually a reaction.

Some useful strategies for circumvention are now no longer available, such as domain fronting – an approach facilitated by “too big to block” companies like Google and Amazon, which allowed applications to appear as if they operate on these companies’ servers, thereby making it costly to block. Domain fronting is no longer possible with Google and Amazon servers as of May 2018.

While some civil society organisations like ACLU, CDT and ARTICLE 19 are working with the technical community to support better protocols, this is a new front of human rights advocacy and there is a growing need to build evidence and sustain engagement with bodies like the Internet Engineering Task Force (IETF). You can learn more about the importance of internet governance fora like the IETF for freedom of speech in Media Development in the Digital Age, and look out for ARTICLE 19’s upcoming book with No Starch Press, A Cat’s Guide to the Internet.

You can get the latest news and view a teaser chapter on Tor here.

Mallory Knodel is Head of Digital at ARTICLE 19.