As the theory goes, policy can create the optimal framework for trust. But, in reality...

This statement was originally published on privacyinternational.org on 1 August 2016.

By Gus Hosein, Executive Director at Privacy International

Privacy can be seen as a reflex of innovation. One of the seminal pieces on the right to privacy as the ‘right to be let alone’ emerged in response to the camera and its use by the tabloid media. Seminal jurisprudence has likewise come in response to new surveillance innovations, though often with significant delays.

While one approach would be to say that privacy is a norm and that, with modern technologies, the norm must be reconsidered and, if necessary, abandoned, I think there’s an interesting idea around the question of protecting privacy as a protection of innovation.

Protecting data to protect innovation

At its most conservative, creating the basic laws of the land to ensure that people can have confidence in technologies and transactions is why we have seen the spread of data protection laws – now in over 100 countries – and encryption – now considered essential to commerce. Neither safeguard was easy to establish, as powerful interests have pushed back, and continue to push back, against them.

Studies repeatedly show that the lack of confidence in our infrastructure actually inhibits commerce. A recent survey from the Information Commissioner’s Office shows a lack of confidence in industry and government to manage our information, so people are taking matters into their own hands. The ICO interprets this as an explanation for the rise of adblockers.

As the theory goes, policy can create the optimal framework for trust. A recent survey of Americans found that a majority of people wanted similar rules applied to all of industry, though the U.S. Congress continues to be unable to move on data protection rules after decades of inactivity with no sign of change on the horizon.

Protecting people’s rights over the innovation that they’re being served

It’s not law alone that people need. People do not transact just because they know there are laws in place. They are likely to want an element of control.

Another recent survey from Pew Research Center found that ‘when presented with a scenario in which they might save money on their energy bill by installing a smart thermostat that would monitor their movements around the home, most adults consider this an unacceptable tradeoff (by a 55% to 27% margin).’ People do want control over their electricity and most likely want to reduce their costs. But because the data generation in this scenario is uncontrollable, the concern rises.

Many of the innovations today don’t give people that ability. Most technology development today actually removes the ability for the individual to even know what data is being generated.

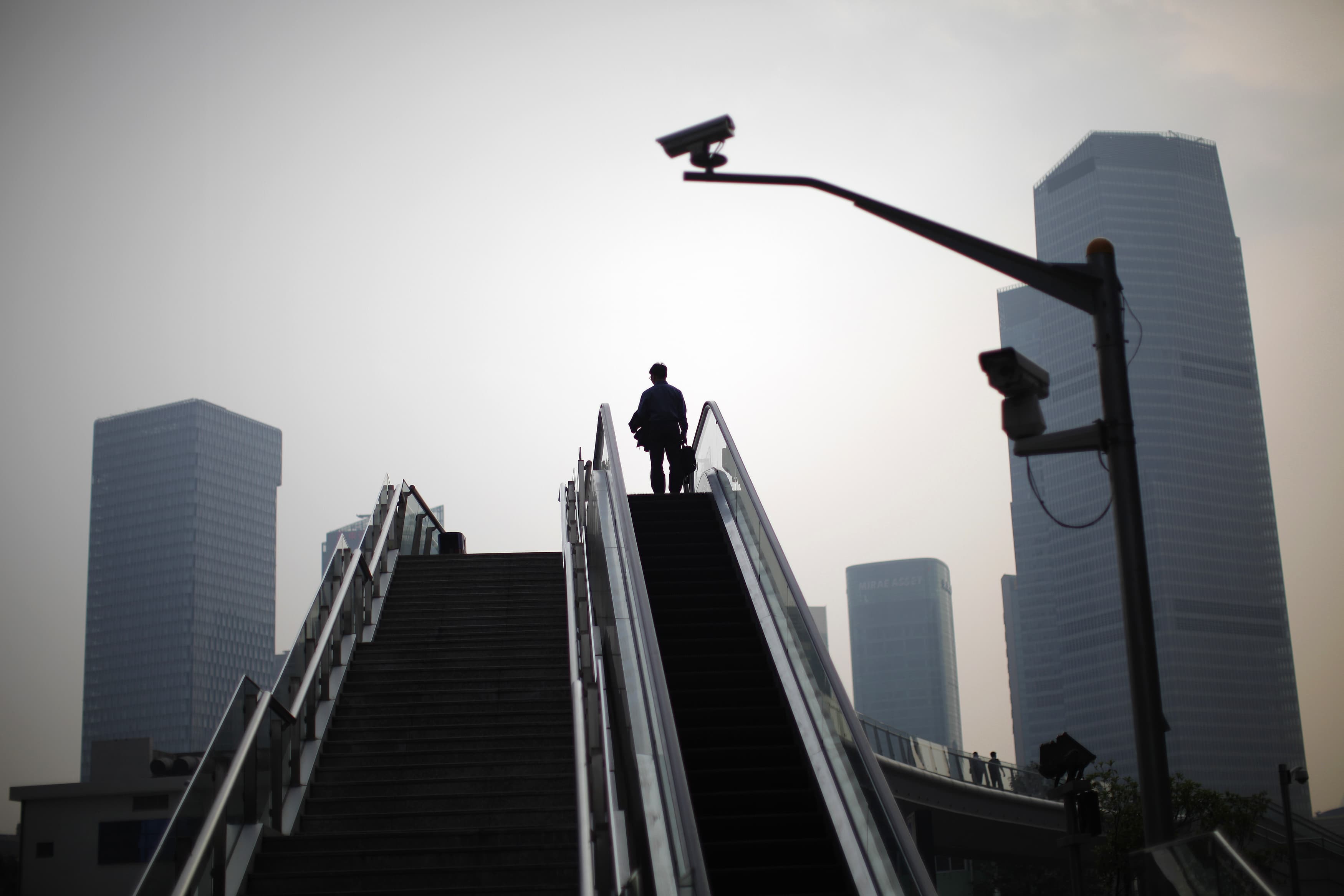

Previously we could understand what was occurring over a telegram, a telephone call, or an internet transaction. Now, with the numerous sensors, scripts and cookies on our devices and services, we can’t tell what data is being generated or what is happening with that data. Even as you imagine that, you tend to focus on the devices that we actually own; now think about the future smart city and what unknown data is being generated by what unknown technologies owned by which unknown institutions. Today we see signs for CCTV cameras; what will we have in the future for all the sensor networks?

Transparency and control are going to be radically different going forward.

Protecting innovation from surveillance

If we want to see a world where there is data everywhere, we need technology to be distributed everywhere. This is the idea that there will be sensors everywhere generating data, collecting data, sharing data. Such a world comes with new potentials but also a myriad of new risks.

Surveillance may actually be one of those risks. I would like to suggest that surveillance laws may chill the spread of these technologies. If we look at the current communications surveillance discourse, it is focused on issues around the internet where the user possesses a mobile phone or a computer. But how do communications surveillance powers apply to the sensor-rich environment, or the Internet of Things? While it is in the realm of our imaginations to allow governments access to our locations and telephone call logs for the purpose of an important investigation, how about these scenarios:

Would we as a society be willing to permit governments to know our movements at all times, here and abroad, to monitor our heartbeats, to keep records of all our interactions and of what we watch and read, and to be able to go back in time and see everything we’ve done? This is already the law, but it is rarely discussed publicly in such a way.

Should we allow law enforcement to operate fake websites of our banks, pretend to be our utility providers, our friends, provide fake internet or mobile service so that they can monitor groups of people? They are doing much of this already on a widespread basis with no clear legal framework.

Should we allow any institution to be able to search our lives and conversations and find out at any given moment if we like a given minister, or have a preference that some other interested individual or group may have? This has been done for years, under social media monitoring and GCHQ’s operation Squeaky Dolphin.

Should we ensure that all our personal databases, home CCTV security networks, baby monitors, wearable devices and Fitbits, smart TVs and fridges, smart sensors, and all future services have secret back doors that allow for any government anywhere to get access to the information inside? This is all permissible already, according to the British government.

Should we hack into someone’s home even if they’re not a suspect? Hack a transport infrastructure provider? A telecommunications company? An airline? A car? A car manufacturer? Governments are already doing some of this and claiming that it is within the law.

Finally, should we ensure that all these capabilities are built into all technologies sold everywhere, and even that the capabilities of surveillance be made available to any government that seeks them? That’s the state of affairs now with surveillance technology, where standards ensure that surveillance capabilities are in there by design.

These are simple examples because all they do is apply existing extraordinary powers to new infrastructure. This is the very same infrastructure that most consider essential to our future economic and social growth. And yet the internet has already been turned into a significant tool for collection and analysis of data by so many third parties. Why should we expect this to not happen again?

In this context, every demand and excitement around data-driven innovation is one that invites an infrastructure whose security and integrity will be undermined for the purpose of surveillance. The logic therefore says that if we want a secure infrastructure where individuals are in control, in order to have the confidence in a society where data is well-governed, then we cannot allow for it to be undermined.

Radical innovation or radical protections?

Innovation today seems to refer to anything in the realm of data generation. It’s interesting if we think about some of the concepts in our data world that we wouldn’t have if they were to be invented today. So deep is our excitement and discourse around data innovation that we wouldn’t ever consider creating limitations on collection and the purpose of processing, data protection regulators, limitations on data-sharing, and even warrant regimes. But these all pre-date the data and innovation discourse; how hard are we going to fight to change these risks to innovation, instead of fighting the ones posed by surveillance? Is it possible that the ability to collect and share data only arose because the legal context provided safeguards that the innovators are now removing?

Therefore, it’s noteworthy that I feel like the radical for defending privacy in the context of data governance, when the ideas that should be branded as radical are the ones that are trying to undermine all of these practices, protections, norms, and rights we have built up for good reason. Those protections are the innovations that should run deep, the ones that give rise to all the others.

The future has to be bright: if we want all the things we want, we need the frameworks to provide them and prevent the things that will undermine them. At best, we will be able to develop a new discourse and new safeguards. At worst, we continue the cycle we have long been stuck in: we build it, we take it to market, we promote it, and we act aghast when abuse arises.