With measles cases on the rise for the first time in decades and anti-vaccine (or “anti-vax”) memes spreading like wildfire on social media, a number of companies—including Facebook, Pinterest, YouTube, Instagram, and GoFundMe—recently banned anti-vax posts.

But censorship cannot be the only answer to disinformation online. The anti-vax trend is a bigger problem than censorship can solve. And when tech companies ban an entire category of content like this, they have a history of overcorrecting and censoring accurate, useful speech or, even worse, reinforcing misinformation with their policies. That’s why platforms that adopt categorical bans must follow the Santa Clara Principles on Transparency and Accountability in Content Moderation to ensure that users are notified when and why their content has been removed, and that they have the opportunity to appeal.

Many intermediaries already act as censors of users’ posts, comments, and accounts, and the rules that govern what users can and cannot say grow more complex with every year. But removing entire categories of speech from a platform does little to solve the underlying problems.

Tech companies and online platforms have other ways to address the rapid spread of disinformation, including addressing the algorithmic “megaphone” at the heart of the problem and giving users control over their own feeds.

Data Voids

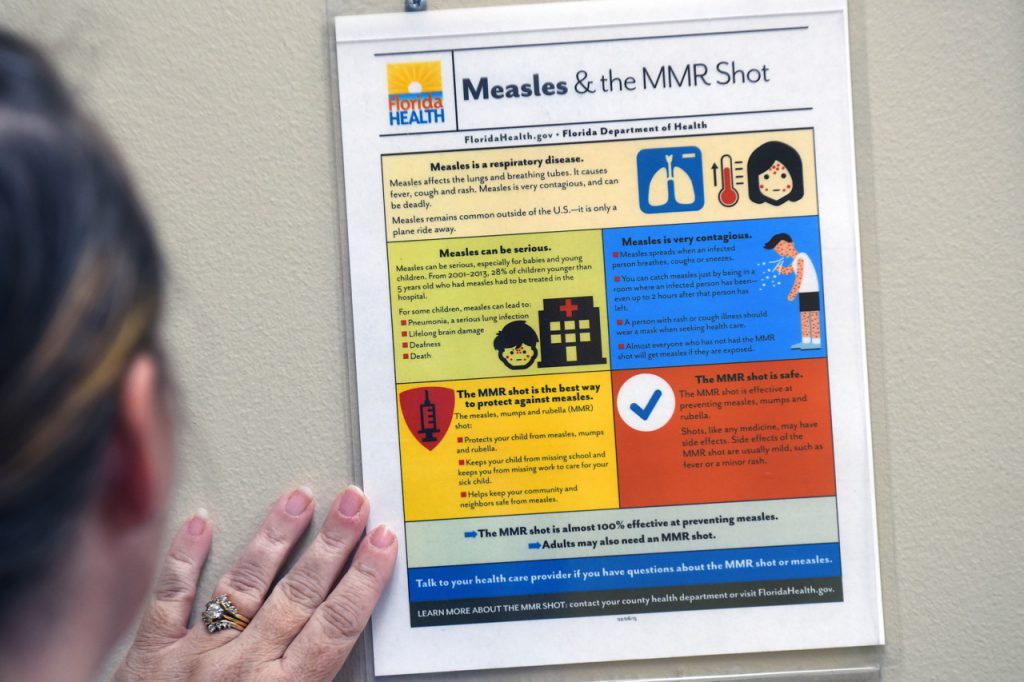

Anti-vax information is able to thrive online in part because it exists in a data void in which available information about vaccines online is “limited, non-existent, or deeply problematic.” Because the merit of vaccines has long been considered a decided issue, there is little recent scientific literature or educational material to take on the current mountains of disinformation. Thus, someone searching for recent literature on vaccines will likely find more anti-vax content than empirical medical research supporting vaccines.

Censoring anti-vax disinformation won’t address this problem. Even attempting the impossible task of wiping anti-vax disinformation from the Internet entirely would put it beyond the reach of researchers, public health professionals, and others who need to be able to study it and understand how it spreads.

In a worst-case scenario, well-intentioned bans on anti-vax content could actually make this problem worse. Facebook, for example, has over-adjusted in the past to the detriment of legitimate educational health content: A ban on “overly suggestive or sexually provocative” ads also caught the National Campaign to Prevent Teen and Unwanted Pregnancy in its net.

Empowering Users, Not Algorithms

Platforms must address one of the root causes behind disinformation’s spread online: the algorithms that decide what content users see and when. And they should start by empowering users with more individualized tools that let them understand and control the information they see.

Algorithms like Facebook’s Newsfeed or Twitter’s timeline make decisions about which news items, ads, and user-generated content to promote and which to hide. That kind of curation can play an amplifying role for some types of incendiary content, despite the efforts of platforms like Facebook to tweak their algorithms to “disincentivize” or “downrank” it. Features designed to help people find content they’ll like can too easily funnel them into a rabbit hole of disinformation.
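
To make the amplification point concrete, here is a toy sketch in Python of an engagement-ranked feed with a downranking penalty. It is not how any real platform's algorithm works: the `Item` fields, the `DOWNRANK` multiplier, and all of the numbers are hypothetical, chosen only to show that a penalty on flagged content may not be enough when that content draws far more engagement than everything else.

```python
from dataclasses import dataclass


@dataclass
class Item:
    title: str
    engagement: float  # hypothetical tally of clicks, shares, and comments
    flagged: bool      # hypothetical "incendiary content" flag


# Hypothetical penalty: flagged items keep only half of their score.
DOWNRANK = 0.5


def score(item, downrank=DOWNRANK):
    """Engagement-based score, reduced by the penalty if the item is flagged."""
    return item.engagement * (downrank if item.flagged else 1.0)


def rank(items, downrank=DOWNRANK):
    """Return items sorted from highest to lowest score."""
    return sorted(items, key=lambda i: score(i, downrank), reverse=True)


# Made-up example data.
items = [
    Item("Clinic schedule update", 120.0, False),
    Item("Outrage-bait health rumor", 900.0, True),
    Item("Local school news", 300.0, False),
]

ranked = rank(items)
for item in ranked:
    print(f"{score(item):7.1f}  {item.title}")
```

In this made-up data the flagged rumor still lands at the top of the feed after its score is halved, because the ranking continues to reward engagement above everything else.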

That’s why platforms should examine the parts of their infrastructure that are acting as a megaphone for dangerous content and address that root cause of the problem rather than censoring users.

The most important parts of the puzzle here are transparency and openness. Transparency about how a platform’s algorithms work, and tools to allow users to open up and create their own feeds, are critical for wider understanding of algorithmic curation, the kind of content it can incentivize, and the consequences it can have. Recent transparency improvements in this area from Facebook are encouraging, but don’t go far enough.

Users shouldn’t be held hostage to a platform’s proprietary algorithm. Instead of serving everyone “one algorithm to rule them all” and giving users just a few opportunities to tweak it, platforms should open up their APIs to allow users to create their own filtering rules for their own algorithms. News outlets, educational institutions, community groups, and individuals should all be able to create their own feeds, allowing users to choose who they trust to curate their information and share their preferences with their communities.
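
As a rough illustration of what user-defined filtering rules could look like, here is a minimal sketch in Python. It assumes a platform exposes posts through some open API, which is exactly the thing being asked for and not something that exists today. The `Post` type, `build_feed`, and the rule helpers are all hypothetical names invented for this example.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Set


@dataclass(frozen=True)
class Post:
    # Hypothetical shape of a post returned by an open platform API.
    author: str
    text: str
    topic: str


# A rule is any function that returns True for posts the user wants to keep.
Rule = Callable[[Post], bool]


def build_feed(posts: Iterable[Post], rules: List[Rule]) -> List[Post]:
    """Keep only the posts that pass every rule, preserving original order."""
    return [p for p in posts if all(rule(p) for rule in rules)]


def from_trusted(sources: Set[str]) -> Rule:
    """Rule: keep posts whose author is in the user's trusted list."""
    return lambda post: post.author in sources


def exclude_topics(topics: Set[str]) -> Rule:
    """Rule: drop posts about topics the user has chosen to hide."""
    return lambda post: post.topic not in topics


# Made-up example data.
posts = [
    Post("county_health_dept", "Measles clinic hours this week", "health"),
    Post("random_page", "Shocking vaccine 'truth'", "health"),
    Post("local_news", "City council meets Tuesday", "politics"),
]

# A rule set a user, or a curator they trust, might publish and share.
my_rules = [from_trusted({"county_health_dept", "local_news"})]
feed = build_feed(posts, my_rules)
print([p.author for p in feed])
```

Because each rule is just a function, a news outlet or community group could publish its own rule set and a user could adopt it, combine it with others, or swap it out, without the platform deciding on their behalf.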

Asking the Right Questions

Censorship by tech giants must be rare and well-justified. So when a company does adopt a categorical ban, we should ask: Can the company explain what makes that category exceptional? Are the rules to define its boundaries clear and predictable, and are they backed up by consistent data? Under what conditions will other speech that challenges established consensus be removed?

Beyond the technical nuts and bolts of banning a category of speech, disinformation also poses ethical challenges to social media platforms. What responsibility does a company have to prevent the spread of disinformation on its platforms? Who decides what does or does not qualify as “misleading” or “inaccurate”? Who is tasked with testing and validating the potential bias of those decisions?